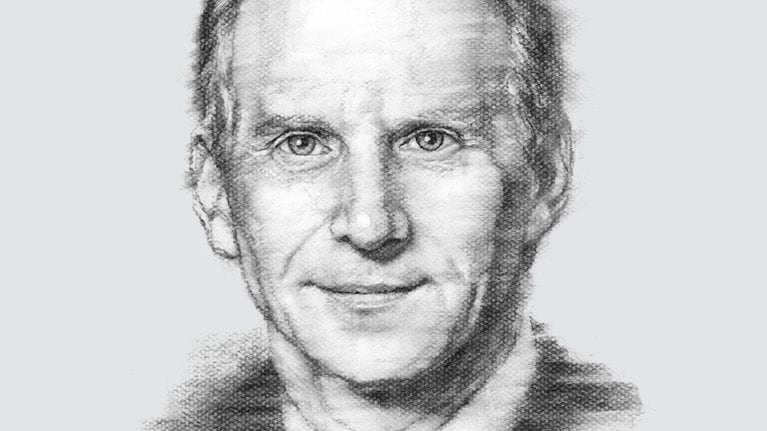

Kenneth Knight describes his job as helping the president of the United States and his administration “avoid surprise.” As the national intelligence officer for warning, Knight oversees a small team of analysts who serve as an institutionalized safeguard against risk—monitoring and challenging the analyses and assumptions of the broader intelligence community. In this interview, he discusses evaluating threats, overcoming cognitive biases, and constructing scenarios—challenges familiar to most private-sector strategists. McKinsey’s Drew Erdmann and Lenny Mendonca spoke with Knight in Washington, DC, in June 2009.

How complicated has the task of understanding threats become?

I think for me it’s been kind of reflective of the changes in the international security environment. Our warning system came into being during the Cold War timeframe. And it was focused on what I would call static, more military-oriented problems, where you could define the bad outcome ahead of time and kind of monitor against that—country A invading country B.

I think in today’s world, that’s a necessary but not sufficient part of the overall warning mission. We’ve got a tremendous number of issues that don’t lie within a nation state, that don’t involve a military or defense-related kind of background. So trying to transform that system to be more adept, more dynamic, more able to deal [with] and anticipate emerging challenges in this global environment we’re in today, I think, is the hardest thing, because it’s not an organizational change as much as it is a mind-set and focus change.

How do you decide which things you need to warn about?

You have to have a sense of humor to be in this business, I think. And I am very aware of the risk of denigrating the warnings I do provide—or our community does provide—by warning about everything. We generally try to run through a couple of rule-of-thumb assessments internal to our staff and then with the broader community.

The first is kind of a simple likelihood-of-the-event versus impact-of-the-event calculation. I’m much more willing to wait and not warn about a medium- or low-impact event until I’m more certain that it is going to happen and have more evidence supporting it. And in those cases, your opportunity for being wrong or for crying wolf is probably lower—or the chance that you’re missing something is probably lower—because you’re waiting until later in the game.

I think we’ve struck a decent balance between not missing major things and not overwarning and crying wolf. But that’s, I think, for others to judge. I still have my job after five years, so I guess it’s not been too bad.

You work with lots of experts. What do you do to overcome cognitive biases that intrude?

This is a very big concern for people in the warning business. I mentioned I have a small staff. It’s six or seven people covering the entire world, so we’re not the experts on any issue that we engage on. We are very aware of the research pointing toward expert bias, and I think that puts us and the experts in kind of a difficult position a lot of times.

Most experts become experts and rise to the top of our community because they have a very good analytic framework for looking at their issue. That allows them to process lots of information, put it in some kind of strategic context, and say something relevant and useful to the policy people.

A lot of times surprises occur when those analytic frameworks that the experts have—and have built their career on, and have built their experience on—no longer apply. And so we are constantly pushing on that and causing tension, where a nonexpert from my office is engaging with an expert and challenging their expert bias. There are really two ways to get around that. We’ve tried in both areas, and I think we have made progress in both.

The first is training. You can train individual analysts and expose them to the research that you mentioned in your question. Try to make them cognizant of their own potential biases. Give them tools for challenging their own assumptions, for doing kind of competing alternative analyses. Do things internally to their organizations, such as conducting peer reviews. Try to get their managers to embrace some of these ideas also. And we’ve done a lot over the last five or six years to try to train the workforce—all analysts—in those kinds of techniques, analytic procedures, and methodologies.

I think the second thing is really what my organization represents. You can assume the analyst will always have some kind of pathologies and then create an institutional check: a warning staff that has the mission of challenging those. We have a number of organizations that have some kind of function along those lines, whether it’s red teams, or red cells, or alternative analysis groups. And I think that’s fairly successful.

If there’s a third way, I’d be happy to hear about that because this expert-bias notion and the fact that you’re asking an expert in a crisis to maybe challenge or abandon an analytic framework that has served them well, I mean it just goes against human nature, especially with the time and pressure that you have in a crisis situation.

How do you strike the balance between short- and long-term analysis?

I always use the analogy: my father was a DC policeman. And some of this is about the difference between a stakeout, where you’re watching some known activity that you’re interested in—say country A invading country B—and more walking the beat, where you’re going through your neighborhood looking for signs that don’t look right to you. Maybe it’s anomalies, maybe it’s some kind of activity that is new, and potentially maybe you just want to understand it.

But that balance between the horizon scanning—walking the beat—and then the standing warning issues, where you know you’re concerned and want to watch, there’s a range of analytic techniques. And I think one size doesn’t fit all there.

I feel much better about what I would call the enduring warning concerns—the stakeout problems, where I know to watch, and we built an analytic process, and we watch the area, and we typically have designated people watching it. I think we can still get surprised there, but those I feel pretty good about. What worries me more are the emerging issues that we didn’t imagine or didn’t know to watch. And that’s what my staff is trying to push and engage and uncover out of that tremendous daily, weekly, and monthly product that our community churns out.

And, again, I think the sense of humor thing is important. I mean, I started out by saying we try to prevent strategic surprise. Well, we know there are going to be surprises. And, in fact, my predecessor said, “There are going to be surprises on your watch, and if the surprise is big enough, then they’ll look for another person to take your job.” Something I have embraced, and that I tell my people, is that you have to be used to being the dumbest guy in the room. And when we work an issue—it could be China today, it could be technology tomorrow, it could be Iran the day after that—we literally are the dumbest people in the room, because we’re dealing with a community of experts who have studied the issue for a long time. I think that’s kind of a humbling way to keep yourself fresh.

What about the economic crisis?

The importance of overall economic issues, trends, and developments, and their national security import, is something I think we’re going to have to acclimate ourselves to from the intelligence community’s perspective. We’ve always had people paying attention to the economy, but we probably put a higher premium on state-centric defense, foreign policy, and security, so on ministries of foreign affairs, ministries of defense, and leaderships. Then there is the nongovernmental, nonstate dimension, where so much of what is driving change in the world doesn’t necessarily happen in a capital, or with an organizational entity that we understand very well or have the levers to engage very well.

What advice would you give leaders in the private sector about how to approach risk analysis?

It’s funny you’d ask that, because I’m intimidated by people that try to figure out Wall Street. You know it can’t be any easier, I would think. But what I found is, trying to take systematic analytic approaches where you are constantly engaging yourself to try to determine: what do you think is most important that you’re trying to accomplish? What do you most want to avoid?

And then, from my perspective, look at the kinds of developments—whether they’re political, economic, social, or military—that could in some way either frustrate what you’re trying to accomplish or provide an opportunity. So, to me, it’s this constant articulation of what are you trying to accomplish? What are you trying to avoid? And then a conscious examination of the world and the information you have in front of you to try to, from a possibilities-based perspective, imagine how this information and the situation you see developing could [make an] impact on those things you’re trying to accomplish.

I’m a big fan of the risk approach to my job. Too often I think our analysts try to make an either–or call. So, you know, leader A is going to be removed from power or he’s not. And again, since nobody wants to be wrong, when you’re down to that either–or approach I think you are waiting until the confirming evidence is overwhelming. So I’ve encouraged people in the warning business to think more in terms of, “Is the risk increasing or decreasing? Is there a strategic opportunity unfolding?”—to think more from a possibilities-based than an evidence-based approach.

How much does experience play into your approach?

I’ve been in this business 30 years. I would say almost everything I learned in the first 25 years is challengeable. And so to believe that expertise comes with longevity, to believe that my successful past model has always got me through and therefore will continue to get me through, to believe that that analytic framework and my metric system—what I use to judge the value of information sources or the credibility of my analytic process—to assume that the way it was five years ago is the way it is today or will be five years in the future, I just think gets us into trouble.

I think if you get far enough in the future, you might believe that the system that you have in ten years or so is somewhat unrecognizable compared to where we are today or where we were five years ago. But I just think this constant need to look at your own business model or your own, in our case, intelligence model, and to look at what are the baseline assumptions that you have that that model rests on and to challenge those—and not just in a check-the-box way, not just do an alternative assessment at the end of your baseline analysis and reconfirm your analysis, but to truly challenge them—to me it’s the only way, I think, to stay current and perhaps stay ahead of the curve.

How do you construct scenarios?

The first thing I would say is that not all analytic problems are the same. So, if you’d allow me, I think the kinds of issues we’re trying to deal with fall on a spectrum from the known and knowable to the more complex and chaotic. I think it was Joe Nye, when he was chairman of the National Intelligence Council, who described this as mysteries and secrets, which is not a bad shorthand.

I think the second thing for us is, I try to imagine the perfect information that I would like to have if I were going to address my issue with high confidence, and then I compare that to the information that I either have or I’m likely to be able to get.

I think a third thing for us is to think about the consumer that we’re actually engaging—and what is the risk tolerance of that person? What kind of decision metrics do they have? What kind of decision are we actually trying to support? It’s different if it’s a military commander with troops in the field being killed than if it’s someone who’s doing long-range strategic planning. And then a fourth thing, that’s kind of the tyranny for everyone, is time. I mean, you can think about these things a lot, but if you have to answer the question tomorrow morning, you fight with who you have.