By applying advanced and predictive analytics up front, companies can build R&D plans they can stick to, for shorter lead times and better cost discipline.

R&D projects are inherently unpredictable. When embarking on efforts to design complex things, companies often have little idea how long a project will take, what it will cost, or what they'll finally be able to deliver to their customers. Initial project plans are sometimes no more than educated guesswork.

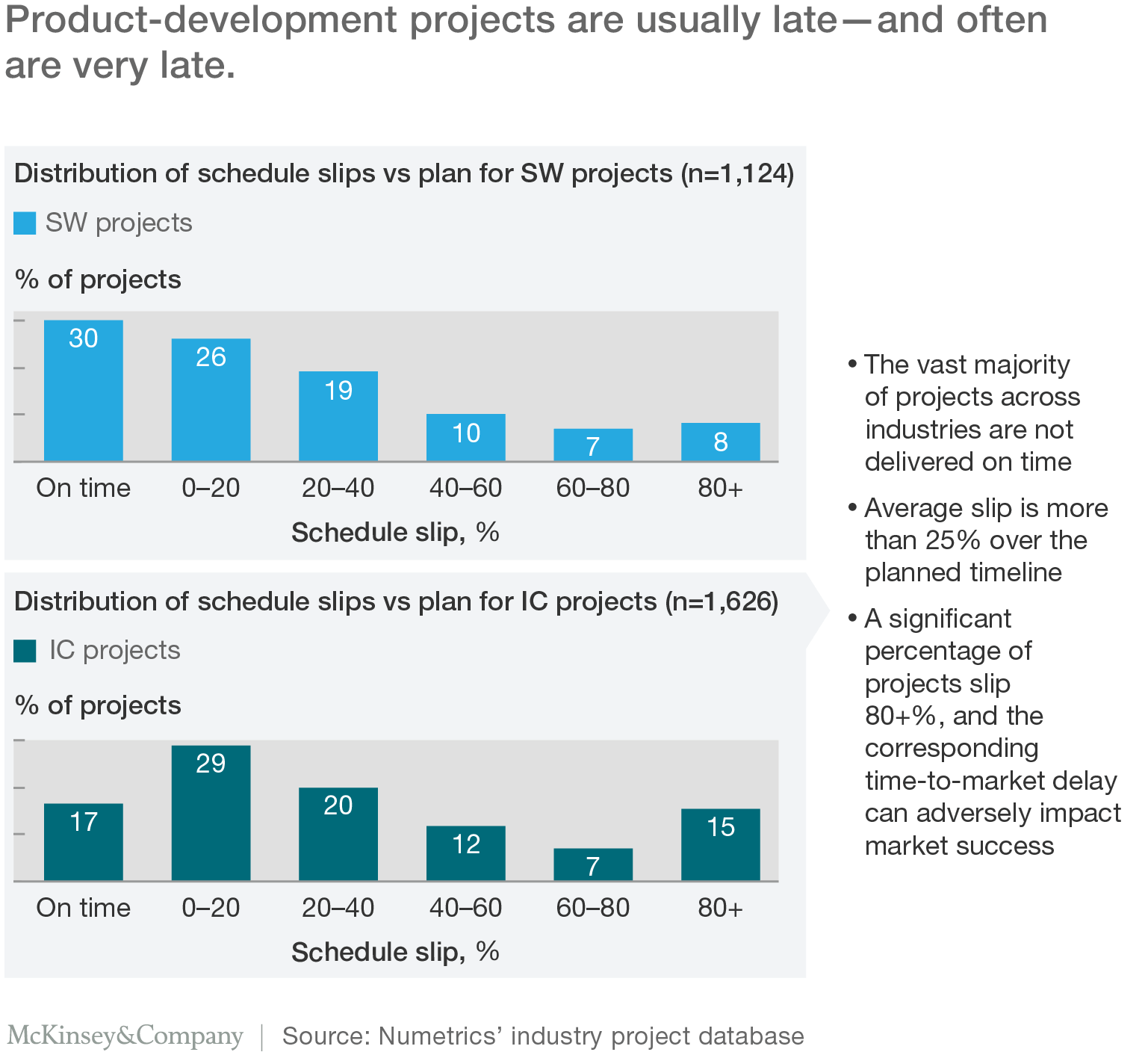

In an analysis of more than 1,100 software projects, for example, we found that only 30 percent met their original delivery deadline, with an average overrun of around 25 percent. Even more telling, out of 1,600 integrated-circuit (IC) design projects, more than 80 percent were late, and the average overrun was about 30 percent. Moreover, those projects were almost as likely to suffer an 80 percent overrun as they were to finish on time, showing just how unpredictable development can be (Exhibit 1).
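The headline figures rest on two simple per-project measures: whether a project met its original deadline, and by how much it overran. The sketch below shows one way to compute them. The (planned, actual) durations are invented for illustration. Averaging the overrun across all projects, early finishes included, is an assumption, because the article does not say which convention it used.

```python
from statistics import mean

def overrun(planned: float, actual: float) -> float:
    """Schedule overrun as a fraction of the planned duration (0.25 means 25 percent late)."""
    return (actual - planned) / planned

def summarize(projects: list[tuple[float, float]]) -> dict[str, float]:
    """Headline schedule statistics for (planned, actual) duration pairs."""
    overruns = [overrun(planned, actual) for planned, actual in projects]
    n = len(overruns)
    return {
        # Share of projects that finished on or before the original deadline.
        "on_time_share": sum(o <= 0 for o in overruns) / n,
        # Mean overrun across all projects, early finishes included (assumed convention).
        "mean_overrun": mean(overruns),
        # Share of projects that ran 80 percent or more over plan.
        "share_80pct_or_worse": sum(o >= 0.8 for o in overruns) / n,
    }

# Hypothetical (planned, actual) durations in weeks; not data from the study.
sample = [(20, 20), (20, 25), (40, 72), (10, 9), (30, 36)]
print(summarize(sample))
```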

Delays, and the extra resources needed to counter them, mean higher costs too. For a group of factory-automation software projects, we found an average budget overrun of more than 10 percent, spiking to more than 50 percent for about one-fifth of the group. Then there are the indirect costs. Delayed launches mean lost sales, unnecessary openings for competitors to get ahead, and potentially long-lasting damage to reputations.

The underlying causes of overruns

We've spent more than a decade investigating the root causes of R&D scheduling challenges. In that time, we've interviewed hundreds of project stakeholders, including executive managers, technical leaders, and program and project managers. They highlight two primary issues.

The first issue is underestimating project complexity. Managers and engineering teams are often surprised by the combined impact of all the features and performance targets, and by the cost of integrating all of the requirements into a finished product. The second, related issue is overestimating the development team's productivity. Planners tend to assume that the problems that hampered their previous project will have been fixed and that no new ones will crop up. They assume that specifications will not change over the course of the project and that resources will be available when needed. In practice, of course, such problems affect almost every project.

How predictive analytics can help

Until recently, even companies that understood and sought to address these issues didn't have effective tools for doing so. Conventional complexity metrics, like counting lines of code or function points in software development, are difficult to estimate before the start of a project. And they are affected by unrelated factors, like the development team's programming style.

Today, some companies are adopting a new approach, one that uses powerful data-analysis and modelling techniques to bring new clarity to the estimation of project-resource requirements. The approach recognizes that, while every development project is unique, complexity drivers across projects are similar and can be quantified. By understanding the complexity of a new project, companies can estimate the effort and resources required to complete it.

Which factors matter?

Companies must collect a significant amount of data to determine which factors really affect project effort. Moreover, for practical reasons, the only useful factors are those that can be easily measured and consistently gathered.

In developing a set of models, teams apply an array of advanced-analytics, machine-learning, and artificial-intelligence techniques to a robust data set that incorporates technical specifications, staffing levels, timelines, and related team-environment factors from thousands of completed industry projects.
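The article does not disclose the form of these models. As a minimal illustration of the underlying idea, the sketch below assumes that effort grows as a power law of a single complexity score, effort = a × complexity^b (the form used by classic estimation models such as COCOMO), and fits a and b by least squares in log-log space. The function names, the single-score simplification, and the synthetic history are all hypothetical.

```python
import math

def fit_power_law(complexity: list[float], effort: list[float]) -> tuple[float, float]:
    """Fit effort = a * complexity**b by ordinary least squares on log-transformed data."""
    xs = [math.log(c) for c in complexity]
    ys = [math.log(e) for e in effort]
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx                      # exponent: b > 1 means diseconomies of scale
    a = math.exp(y_bar - b * x_bar)    # scale factor
    return a, b

def predict_effort(a: float, b: float, complexity: float) -> float:
    return a * complexity ** b

# Hypothetical history: complexity scores of completed projects and their effort
# in person-weeks, generated noise-free from a = 2.0, b = 1.2 for illustration.
history_complexity = [10.0, 20.0, 40.0, 80.0]
history_effort = [2.0 * c ** 1.2 for c in history_complexity]

a, b = fit_power_law(history_complexity, history_effort)
print(f"a = {a:.2f}, b = {b:.2f}")
print(f"estimate for complexity 60: {predict_effort(a, b, 60.0):.0f} person-weeks")
```

With an exponent above 1, doubling complexity more than doubles the estimated effort, which is one simple way a model can capture integration costs that planners tend to underestimate.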

To estimate the complexity and required development effort reliably, the models must accommodate variables that are themselves highly complex. In IC design, for example, factors such as voltage, frequency, and crosstalk affect one another in nonlinear, contingent ways, so the model must be able to handle nonlinearity and collinearity. Because variables' behaviour may also be highly context-dependent, the model must treat these variables differently depending on, say, the specific type of product being developed.
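Ridge regression is one standard way to keep coefficient estimates stable when drivers are collinear, and fitting a separate model per product type is one simple way to make variable effects context-dependent. The sketch below combines the two for two drivers. It illustrates those techniques under stated assumptions and is not the models described here; the driver values and product-type labels are made up.

```python
from collections import defaultdict

def ridge_2d(x1, x2, y, lam):
    """Ridge regression y ~ w0 + w1*x1 + w2*x2 with penalty lam on w1, w2 (not on w0)."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    c1 = [v - m1 for v in x1]          # centre the data so the intercept is unpenalized
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    # Entries of (X^T X + lam * I) and X^T y on the centred data.
    s11 = sum(u * u for u in c1) + lam
    s22 = sum(v * v for v in c2) + lam
    s12 = sum(u * v for u, v in zip(c1, c2))
    t1 = sum(u * t for u, t in zip(c1, cy))
    t2 = sum(v * t for v, t in zip(c2, cy))
    det = s11 * s22 - s12 * s12        # near zero for collinear drivers when lam == 0
    w1 = (t1 * s22 - s12 * t2) / det   # Cramer's rule for the 2x2 system
    w2 = (s11 * t2 - s12 * t1) / det
    return my - w1 * m1 - w2 * m2, w1, w2

def fit_by_product_type(rows, lam):
    """rows: (product_type, driver1, driver2, effort); returns one model per product type."""
    groups = defaultdict(list)
    for ptype, d1, d2, eff in rows:
        groups[ptype].append((d1, d2, eff))
    return {
        ptype: ridge_2d([r[0] for r in g], [r[1] for r in g], [r[2] for r in g], lam)
        for ptype, g in groups.items()
    }

# Hypothetical, nearly collinear drivers and synthetic efforts for two product types.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.1, 1.9, 3.05, 3.95, 5.0]
rows = [("new", u, v, 3 + u + 2 * v) for u, v in zip(x1, x2)]
rows += [("derivative", u, v, 1 + 0.5 * u + 0.5 * v) for u, v in zip(x1, x2)]

models = fit_by_product_type(rows, lam=1.0)
for ptype, (w0, w1, w2) in models.items():
    print(f"{ptype}: w0={w0:.2f}, w1={w1:.2f}, w2={w2:.2f}")
```

The penalty spreads weight across near-duplicate drivers instead of letting them take large, offsetting coefficients; with `lam=0.0` the same function reduces to ordinary least squares. Nonlinear effects would still need transformed features (for example, logs) or a nonlinear learner.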

Human factors are highly dynamic, too. Major changes, such as a redesign of the development process, can have a substantial impact on a project's time requirements. Conversely, as a team builds its capabilities, factors that once had a major effect on complexity diminish in importance.

The most sophisticated models incorporate all of these subtleties. And, because they are based on real data, these newer complexity models don't make unrealistic assumptions about productivity. Their estimates automatically incorporate the effects of the everyday delays and disruptions that development teams must face. In other words, they don't just consider the complexity of the project but also the complexity of the team environment.

These models can even identify the productivity impact of changes to working methods. Larger development teams, for example, are less productive per person than smaller ones, because they must expend more effort on internal coordination and communication. The introduction of new teams, new platforms, or new development approaches can also hurt productivity in the short term, even if they are intended to boost it over the long haul. With enough industry data, however, the models can learn how such changes affected productivity in the past and use that to estimate their likely future effects.
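One classic way to reason about the coordination cost of larger teams is to count pairwise communication channels, n(n − 1)/2 for a team of n people, as in Brooks's well-known argument. The toy model below assumes that each channel consumes a fixed, hypothetical fraction of a person-week every week. It is not how the models described here work; it only illustrates why per-person productivity falls as teams grow.

```python
def communication_paths(team_size: int) -> int:
    """Number of pairwise communication channels in a team of n people: n(n-1)/2."""
    return team_size * (team_size - 1) // 2

def effective_capacity(team_size: int, overhead_per_path: float = 0.02) -> float:
    """Productive person-weeks per calendar week after coordination overhead.

    overhead_per_path is a hypothetical fraction of one person-week spent on each channel.
    """
    return max(0.0, team_size - overhead_per_path * communication_paths(team_size))

for n in (3, 10, 30):
    cap = effective_capacity(n)
    print(f"team of {n}: {cap:.1f} effective people, {cap / n:.0%} per-person productivity")
```

Because overhead grows quadratically while headcount grows linearly, total capacity in this toy model peaks at around 50 people with the default overhead and declines beyond that.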

Better plans, smarter decisions

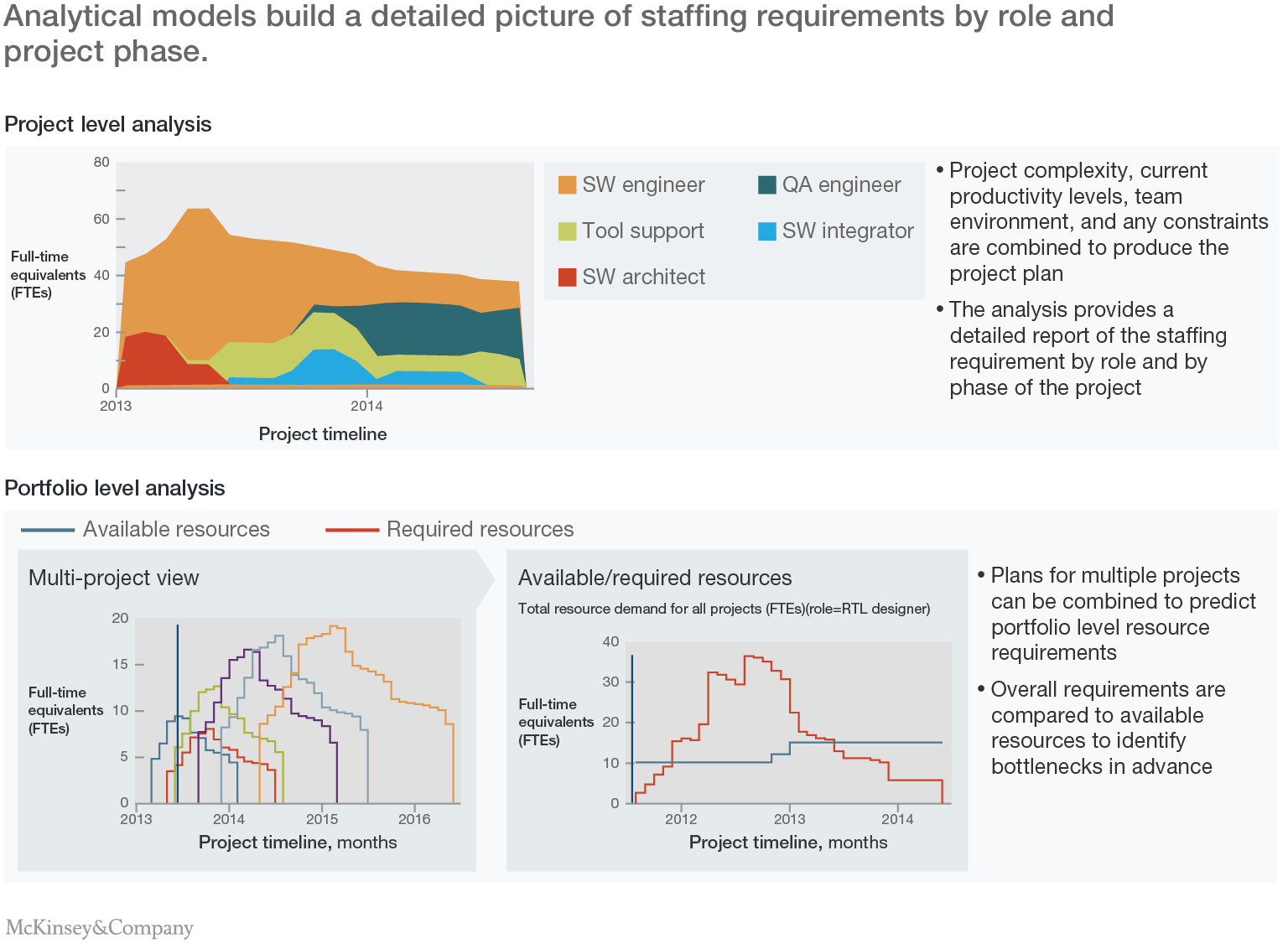

Armed with such models and a baseline of its own productivity levels on similar projects, a company can enter the specification for a new project and create a plan. It can then assess the risk that the plan will overrun its timing and cost estimates, or create a more realistic staffing plan along with a sound budget estimate and an achievable schedule (Exhibit 2).
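A common way to turn a point estimate into an overrun risk is Monte Carlo simulation. The sketch below assumes that actual effort is lognormally distributed around the model's estimate (the lognormal shape and its spread `sigma` are assumptions, not figures from the article) and estimates the probability that a plan with a given staffing level misses its deadline.

```python
import math
import random

def overrun_probability(planned_weeks: float, estimated_effort: float, staff: float,
                        sigma: float = 0.3, trials: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo estimate of P(actual duration > planned duration).

    Actual effort (person-weeks) is drawn from a lognormal distribution whose median
    is the model's estimate; sigma, the spread of that distribution, is an assumption.
    Duration is effort divided by a constant staffing level.
    """
    rng = random.Random(seed)
    mu = math.log(estimated_effort)    # median of the lognormal = exp(mu)
    late = 0
    for _ in range(trials):
        effort = rng.lognormvariate(mu, sigma)
        if effort / staff > planned_weeks:
            late += 1
    return late / trials

# Hypothetical plan: the model estimates 1,000 person-weeks and 20 engineers are available.
for weeks in (40, 50, 70):
    risk = overrun_probability(weeks, estimated_effort=1000, staff=20)
    print(f"{weeks}-week plan: {risk:.0%} chance of overrun")
```

A 50-week plan lands at roughly even odds because it matches the median duration. Sweeping `staff` or `planned_weeks` through the same function is one simple way to find a staffing plan and schedule that meet a chosen confidence level.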

In addition, analytical models provide a powerful new way to deal with constraints. For example, a company can model the resource requirements of multiple projects that are scheduled to run concurrently, to see whether at any point those projects will demand more staff in a specific role than are available. With adequate notice of such shortages, managers can take appropriate action: increasing staff numbers, adjusting schedules to reduce peaks in demand, hiring short-term support, or outsourcing part of the work.
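This check amounts to summing each role's demand across projects, period by period, and comparing the total with available headcount. A minimal sketch, with hypothetical project names, roles, and weekly headcounts:

```python
from collections import defaultdict

def find_shortages(projects: dict, capacity: dict) -> dict:
    """Return {(week, role): shortfall} wherever combined demand exceeds available staff.

    projects: {project_name: {role: [headcount needed in week 0, week 1, ...]}}
    capacity: {role: headcount available in any week}
    """
    demand = defaultdict(float)
    for plan in projects.values():
        for role, weekly in plan.items():
            for week, heads in enumerate(weekly):
                demand[(week, role)] += heads     # sum demand across concurrent projects
    return {
        (week, role): need - capacity.get(role, 0)
        for (week, role), need in sorted(demand.items())
        if need > capacity.get(role, 0)
    }

# Hypothetical staffing plans for two concurrent chip projects.
projects = {
    "chip_a": {"verification": [2, 4, 6, 6], "layout": [1, 1, 2, 3]},
    "chip_b": {"verification": [1, 2, 3, 4], "layout": [0, 2, 2, 2]},
}
capacity = {"verification": 8, "layout": 4}

for (week, role), gap in find_shortages(projects, capacity).items():
    print(f"week {week}: {role} short by {gap:g}")
```

Here verification demand exceeds capacity in weeks 2 and 3, and layout demand in week 3: the kind of early warning that gives managers time to shift a schedule or bring in short-term support.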

If these changes aren't enough to make an aggressive budget or timeline achievable, the company can run what-if analyses to evaluate the impact of dropping certain features or simplifying performance requirements. That allows for a more thoughtful, fact-based discussion—an outcome that's far preferable to missed deadlines or dropping features at the last minute because they weren't finished in time for launch.
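A what-if analysis of this kind can be as simple as re-running the effort model with different feature sets. The sketch below assumes a hypothetical power-law effort model with made-up coefficients and feature complexity scores. It enumerates which optional features could be dropped and keeps the combinations whose estimated effort fits the budget.

```python
from itertools import combinations

def effort(complexities: list[float], a: float = 2.0, b: float = 1.2) -> float:
    """Hypothetical power-law model: person-weeks = a * (total complexity) ** b."""
    return a * sum(complexities) ** b

def what_if(required: float, optional: dict[str, float], budget: float,
            max_drop: int = 2) -> list[tuple[tuple[str, ...], float]]:
    """Feature sets (dropping up to max_drop optional features) whose effort fits the budget."""
    options = []
    for k in range(max_drop + 1):
        for dropped in combinations(optional, k):
            kept = [c for name, c in optional.items() if name not in dropped]
            estimate = effort([required, *kept])
            if estimate <= budget:
                options.append((dropped, estimate))
    return options

# Hypothetical complexity scores: a mandatory core plus three optional features.
optional = {"low_power_mode": 15.0, "extra_interface": 10.0, "diagnostics": 5.0}
for dropped, estimate in what_if(50.0, optional, budget=320.0):
    print(f"drop {', '.join(dropped) or 'nothing'}: {estimate:.0f} person-weeks")
```

Because the assumed exponent is greater than 1, dropping a feature saves more than its proportional share of effort, which is one reason trimming scope early can pay off.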

Predictive analytics at work

Predictive analytics has already transformed the outcomes of several high-value projects, including one at a semiconductor company planning a derivative of a newly released product. Planners originally expected the project to take just 300 person-weeks of effort, reasoning that 90 percent of the new design would carry over from its predecessor. When they re-evaluated the plan using the analytics-based models, however, they found that the project would actually take three to four times as much effort (roughly 900 to 1,200 person-weeks). Although the amount of truly new work was small, it was widely distributed and affected nearly every part of the chip, which necessitated significant extra testing and integration work. That insight led senior leaders to keep the team that had developed the original product together to work on the derivative, enabling efficiencies that ultimately led to on-time delivery.

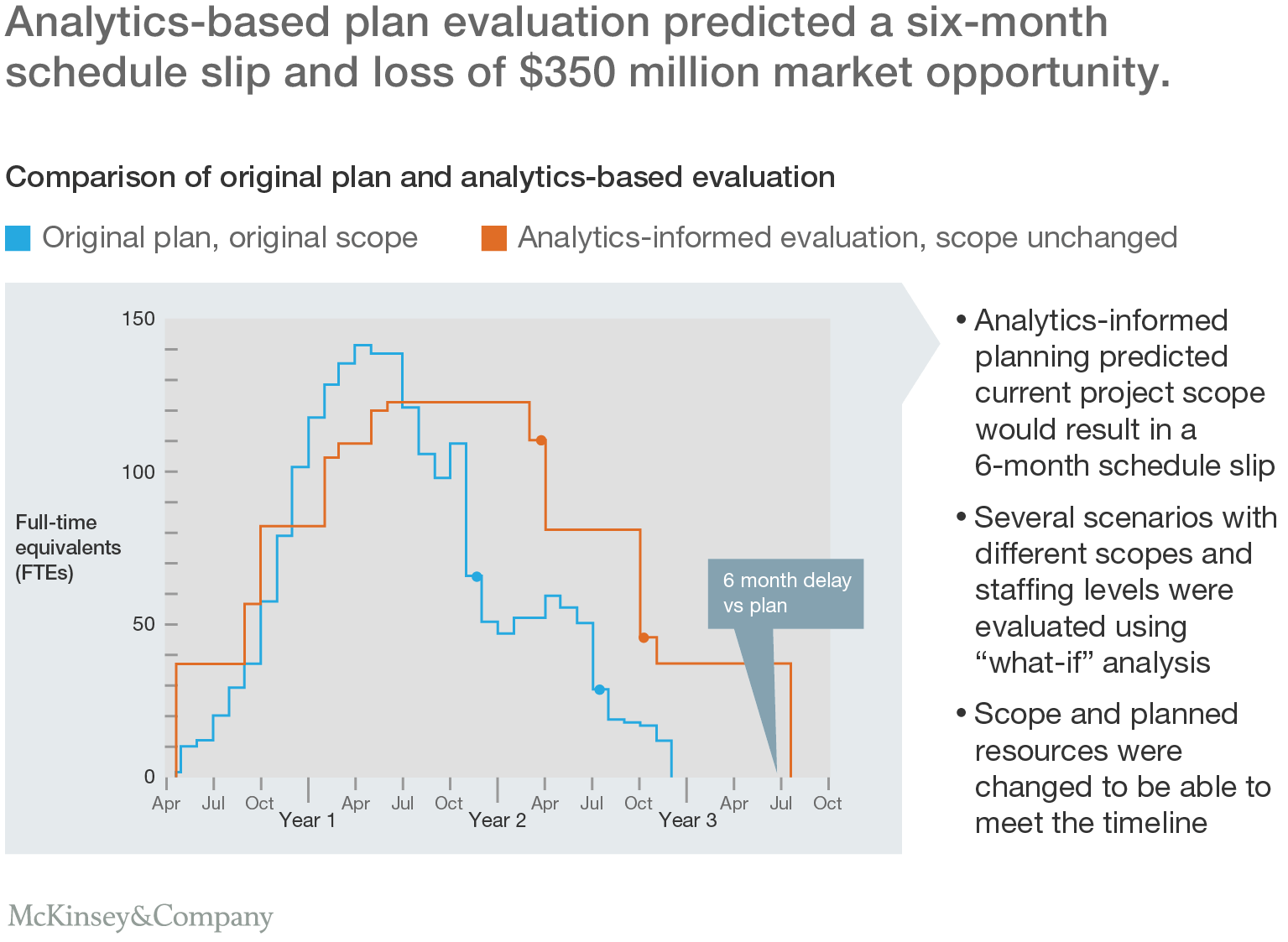

In another example, a company had a tight deadline to complete a new release for a big customer, with competitors vying for the work. The predictive-analytics models showed that the original resource levels and project plan would result in delivery some six months late—missing a market window and a $350 million opportunity. Spurred into action by the finding, the company took steps to reduce the complexity of its design, resulting in a project that met the customer's minimum requirements and could be delivered on time.

* * *

Organizations that apply analytics and predictive tools to their product-development and project-planning processes see a dramatic reduction in schedule slippage. And because they can put the right number of the right people on their projects at the right time, they also see R&D productivity improvements of 20 to 40 percent. That means lower costs and lower risks, a powerful combination in a highly competitive environment.

About the authors: Ori Ben-Moshe, a Solutions General Manager for Numetrics, is based out of McKinsey’s Chicago office, where Alex Silbey is a Numetrics senior R&D expert; Shannon Johnston, a Numetrics R&D expert, is based out of the Toronto office, and Dorian Pyle is a Numetrics data expert in the Washington, DC office.