How confident are you that your analytics initiative is delivering the value it’s supposed to?

These days, it’s the rare CEO who doesn’t know that businesses must become analytics-driven. Many business leaders have, to their credit, been charging ahead with bold investments in analytics resources and artificial intelligence (AI). Many CEOs have dedicated a lot of their own time to implementing analytics programs, appointed chief analytics officers (CAOs) or chief data officers (CDOs), and hired all sorts of data specialists.

However, too many executives have assumed that because they’ve made such big moves, the main challenges to becoming analytics-driven are behind them. Frustrations are beginning to surface; it’s starting to dawn on company executives that they’ve failed to convert their analytics pilots into scalable solutions. (A recent McKinsey survey found that only 8 percent of 1,000 respondents with analytics initiatives engaged in effective scaling practices.) More boards and shareholders are pressing for answers about the scant returns on many early and expensive analytics programs. Overall, McKinsey has observed that only a small fraction of the value that advanced-analytics approaches could unlock has been realized: as little as 10 percent in some sectors. And McKinsey’s AI Index reveals that the gap between leaders and laggards in successful AI and analytics adoption, within as well as among industry sectors, is growing (Exhibit 1).

That said, there’s one upside to the growing list of misfires and shortfalls in companies’ big bets on analytics and AI. Collectively, they begin to reveal the failure patterns across organizations of all types, industries, and sizes. We’ve detected what we consider to be the ten red flags that signal an analytics program is in danger of failure. In our experience, business leaders who act on these alerts will dramatically improve their companies’ chances of success in as little as two or three years.

1. The executive team doesn’t have a clear vision for its advanced-analytics programs

In our experience, this often stems from executives lacking a solid understanding of the difference between traditional analytics (that is, business intelligence and reporting) and advanced analytics (powerful predictive and prescriptive tools such as machine learning).

To illustrate, one organization had built a centralized capability in advanced analytics, with heavy investment in data scientists, data engineers, and other key digital roles. The CEO regularly mentioned that the company was using AI techniques, but never with any specificity.

In practice, the company ran a lot of pilot AI programs, but not a single one was adopted by the business at scale. The fundamental reason? Top management didn’t really grasp the concept of advanced analytics. They struggled to define valuable problems for the analytics team to solve, and they failed to invest in building the right skills. As a result, they failed to get traction with their AI pilots. The analytics team they had assembled wasn’t working on the right problems and wasn’t able to use the latest tools and techniques. The company halted the initiative after a year as skepticism grew.

First response: The CEO, CAO, or CDO—or whoever is tasked with leading the company’s analytics initiatives—should set up a series of workshops for the executive team to coach its members in the key tenets of advanced analytics and to undo any lingering misconceptions. These workshops can form the foundation of in-house “academies” that can continually teach key analytics concepts to a broader management audience.

2. No one has determined the value that the initial use cases can deliver in the first year

Too often, the enthusiastic inclination is to apply analytics tools and methods like wallpaper—as something that hopefully will benefit every corner of the organization to which it is applied. But such imprecision leads only to large-scale waste, slower results (if any), and less confidence, from shareholders and employees alike, that analytics initiatives can add value.

That was the story at a large conglomerate. The company identified a handful of use cases and began to put analytics resources against them. But the company did not precisely assess the feasibility or calculate the business value that these use cases could generate, and, lo and behold, the ones it chose produced little value.

First response: Companies in the early stages of scaling analytics use cases must think through, in detail, the top three to five feasible use cases that can create the greatest value quickly—ideally within the first year. This will generate momentum and encourage buy-in for future analytics investments. These decisions should take into account impact, first and foremost. A helpful way to do this is to analyze the entire value chain of the business, from supplier to purchase to after-sales service, to pinpoint the highest-value use cases (Exhibit 2).

To consider feasibility, think through the following:

- Is the data needed for the use case accessible and of sufficient quality and time horizon?

- What specific process steps would need to change for a particular use case?

- Would the team involved in that process have to change?

- What could be changed with minimal disruption, and what would require parallel processes until the new analytics approach was proven?
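One way to make this impact-and-feasibility screen concrete is a simple scoring pass over candidate use cases. The sketch below is illustrative only: the `UseCase` fields, the 0-to-1 rating scales, the "weakest link" feasibility rule, the 0.4 cutoff, and all of the example figures are assumptions made for the illustration, not a McKinsey method. It also folds the question of parallel processes into the process-change rating rather than modeling it separately.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    annual_impact_musd: float   # estimated first-year value, USD millions (hypothetical)
    data_readiness: float       # 0..1: is the data accessible and of sufficient quality?
    process_change_ease: float  # 0..1: how easily can the process steps change?
    team_change_ease: float     # 0..1: how easily can the team involved adapt?

    @property
    def feasibility(self) -> float:
        # Assumption: a use case is only as feasible as its weakest dimension.
        return min(self.data_readiness, self.process_change_ease, self.team_change_ease)

    @property
    def score(self) -> float:
        # Impact comes first; feasibility discounts it.
        return self.annual_impact_musd * self.feasibility


def shortlist(cases, top_n=3, min_feasibility=0.4):
    """Drop use cases below the feasibility cutoff, then rank the rest by score."""
    feasible = [c for c in cases if c.feasibility >= min_feasibility]
    return sorted(feasible, key=lambda c: c.score, reverse=True)[:top_n]


# Hypothetical candidates; every number here is made up for the example.
candidates = [
    UseCase("predictive maintenance", 12.0, 0.8, 0.6, 0.7),
    UseCase("dynamic pricing", 30.0, 0.3, 0.5, 0.6),
    UseCase("churn prediction", 8.0, 0.9, 0.8, 0.9),
    UseCase("demand forecasting", 15.0, 0.7, 0.7, 0.5),
]

ranked = shortlist(candidates)
for case in ranked:
    print(f"{case.name}: feasibility={case.feasibility:.1f}, score={case.score:.1f}")
```

In this made-up data, the use case with the largest headline value (dynamic pricing) drops out because its data is not ready, which is the kind of trade-off the feasibility questions are meant to surface.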

3. There’s no analytics strategy beyond a few use cases

In one example, the senior executives of a large manufacturer were excited about advanced analytics; they had identified several potential cases where they were sure the technology could add value. However, there was no strategy for how to generate value with analytics beyond those specific situations.

Meanwhile, a competitor began using advanced analytics to build a digital platform, partnering with other manufacturers in a broad ecosystem that enabled entirely new product and service categories. By tackling the company’s analytics opportunities in an unstructured way, the CEO achieved some returns but missed a chance to capitalize on this much bigger opportunity. Worse yet, the missed opportunity will now make it much more difficult to energize the company’s workforce to imagine what transformational opportunities lie ahead.

As with any major business initiative, analytics should have its own strategic direction.

First response: There are three crucial questions the CDO or CAO must ask the company’s business leaders:

- What threats do technologies such as AI and advanced analytics pose for the company?

- What are the opportunities to use such technologies to improve existing businesses?

- How can we use data and analytics to create new opportunities?

4. Analytics roles—present and future—are poorly defined

Few executives can describe in detail what analytics talent their organizations have, let alone where that talent is located, how it’s organized, and whether it has the right skills and titles.

In one large financial-services firm, the CEO was an enthusiastic supporter of advanced analytics. He was especially proud that his firm had hired 1,000 data scientists, each at an average loaded cost of $250,000 a year. Later, after it became apparent that the new hires were not delivering what was expected, it was discovered that they were not, by strict definition, data scientists at all. In practice, 100 true data scientists, properly assigned in the right roles in the appropriate organization, would have sufficed. Neither the CEO nor the firm’s human-resources group had a clear understanding of the data-scientist role—nor of other data-centric roles, for that matter.

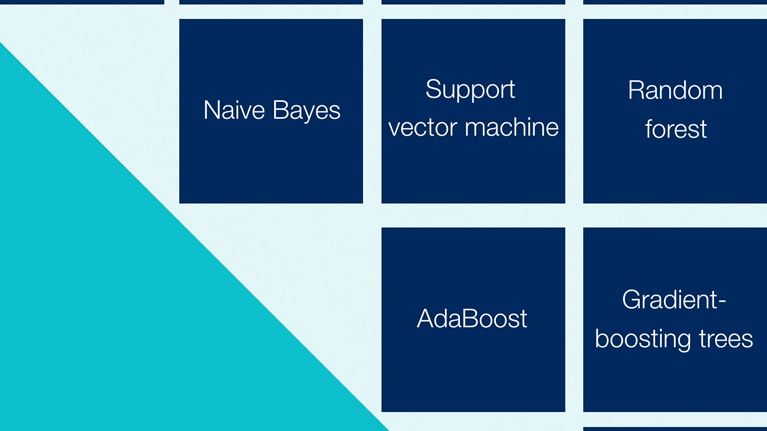

First response: The right way to approach the talent issue is to think about analytics talent as a tapestry of skill sets and roles (interactive). Naturally, many of these capabilities and roles overlap—some regularly, others depending on the project. Each thread of that tapestry must have its own carefully crafted definition, from detailed job descriptions to organizational interactions. The CDO and chief human resources officer (CHRO) should lead an effort to detail job descriptions for all the analytics roles needed in the years ahead. An immediate next step is to inventory all of those currently with the organization who could meet those job specifications. And then the next step is to fill the remaining roles by hiring externally.

5. The organization lacks analytics translators

If there’s one analytics role that can do the most to start unlocking value, it is the analytics translator. This sometimes overlooked but critical role is best filled by someone on the business side who can help leaders identify high-impact analytics use cases and then “translate” the business needs to data scientists, data engineers, and other tech experts so they can build an actionable analytics solution. Translators are also expected to be actively involved in scaling the solution across the organization and generating buy-in with business users. They possess a unique skill set to help them succeed in their role—a mix of business knowledge, general technical fluency, and project-management excellence.

First response: Hire or train translators right away. Hiring externally might seem like the quickest fix. However, new hires lack the most important quality of a successful translator: deep company knowledge. The right internal candidates have extensive company knowledge and business acumen and also the education to understand mathematical models and to work with data scientists to bring out valuable insights. As this unique combination of skills is hard to find, many companies have created their own translator academies to train these candidates. One global steel company, for example, is training 300 translators in a one-year learning program. At McKinsey, we’ve created our own academy, training 1,000 translators in the past few years.

6. Analytics capabilities are isolated from the business, resulting in an ineffective analytics organization structure

We have observed that organizations with successful analytics initiatives embed analytics capabilities into their core businesses. Those organizations struggling to create value through analytics tend to develop analytics capabilities in isolation, either centralized and far removed from the business or in sporadic pockets of poorly coordinated silos. Neither organizational model is effective. Overcentralization creates bottlenecks and leads to a lack of business buy-in. And decentralization brings with it the risk of different data models that don’t connect (Exhibit 3).

A definite red flag that the current organizational model is not working is the complaint from a data scientist that his or her work has little or no impact and that the business keeps doing what it has been doing. Executives must keep an ear to the ground for those kinds of complaints.

First response: The C-suite should consider a hybrid organizational model in which agile teams combine talented professionals from both the business side and the analytics side. A hybrid model will retain some centralized capability and decision rights (particularly around data governance and other standards), but the analytics teams are still embedded in the business and accountable for delivering impact.

For many companies, the degree of centralization may change over time. Early in a company’s analytics journey, it might make sense to work more centrally, since it’s easier to build and run a central team and ensure the quality of the team’s outputs. But over time, as the business becomes more proficient, it may be possible for the center to step back to more of a facilitation role, allowing the businesses more autonomy.

7. Costly data-cleansing efforts are started en masse

There’s a tendency for business leaders to think that all available data should be scrubbed clean before analytics initiatives can begin in earnest. Not so.

McKinsey estimates that companies may be squandering as much as 70 percent of their data-cleansing efforts. Not long ago, a large organization spent hundreds of millions of dollars and more than two years on a company-wide data-cleansing and data-lake-development initiative. The objective was to have one data meta-model—essentially one source of truth and a common place for data management. The effort was a waste. The firm did not track the data properly and had little sense of which data might work best for which use cases. And even when it had cleansed the data, there were myriad other issues, such as the inability to fully track the data or understand their context.

First response: Contrary to what might be seen as the CDO’s core remit, he or she must not think or act “data first” when evaluating data-cleansing initiatives. In conjunction with the company’s line-of-business leads and its IT executives, the CDO should orchestrate data cleansing on the data that fuel the most valuable use cases. In parallel, he or she should work to create an enterprise data ontology and master data model as use cases become fully operational.

8. Analytics platforms aren’t built to purpose

Some companies know they need a modern architecture as a foundation for their digital transformations. A common mistake is thinking that legacy IT systems have to be integrated first. Another mistake is building a data lake before figuring out the best ways to fill it and structure it; often, companies design the data lake as one entity, not understanding that it should be partitioned to address different types of use cases.

In many instances, the costs for such investments can be enormous, often millions of dollars, and they may produce meager benefits, in the single-digit millions. We have found that more than half of all data lakes are not fit for purpose. Significant design changes are often needed. In the worst cases, the data-lake initiatives must be abandoned.

That was the case with one large financial-services firm. The company tried to integrate its legacy data warehouses and simplify its legacy IT landscape without a clear business case for the analytics the data would fuel. After two years, the business began to push back as costs escalated, with no signs of value being delivered. After much debate, and after about 80 percent of the investment budget had been spent, the program screeched to a halt.

First response: In practice, a new data platform can exist in parallel with legacy systems. With appropriate input from the chief information officer (CIO), the CDO must ensure that, use case by use case, data ingestion can happen from multiple sources and that data cleansing can be performed and analytics conducted on the platform—all while the legacy IT systems continue to service the organization’s transactional data needs.

9. Nobody knows the quantitative impact that analytics is providing

It is surprising how many companies are spending millions of dollars on advanced analytics and other digital investments but are unable to attribute any bottom-line impact to these investments.

The companies that have learned how to do this typically create a performance-management framework for their analytics initiatives. At a minimum, this calls for carefully developed metrics that track most directly to the initiatives. These might be second-order metrics instead of high-level profitability metrics. For example, analytics applied to an inventory-management system could uncover the drivers of overstock for a quarter. To determine the impact of analytics in this instance, the metric to apply would be the percentage by which overstock was reduced once the problem with the identified driver was corrected.

Precisely aligning metrics in this manner gives companies the ability to alter course if required, moving resources from unsuccessful use cases to others that are delivering value.
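The overstock example reduces to a simple percentage calculation. The snippet below is a minimal sketch of that second-order metric; the function name and the unit counts are hypothetical and chosen only to show the arithmetic.

```python
def pct_reduction(baseline: float, current: float) -> float:
    """Percentage by which a quantity fell relative to its baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - current) / baseline


# Hypothetical figures: overstock units in the quarter before and after
# the identified driver was corrected.
baseline_overstock_units = 4000
current_overstock_units = 3100

reduction = pct_reduction(baseline_overstock_units, current_overstock_units)
print(f"Overstock reduced by {reduction:.1f}%")
```

Tracking a figure like this per use case, quarter by quarter, is what makes it possible to compare use cases and move resources toward the ones that are delivering.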

First response: The business leads, in conjunction with translators, must be the first responders; it’s their job to identify specific use cases that can deliver value. Then they should commit to measuring the financial impact of those use cases, perhaps every fiscal quarter. Finance may help develop appropriate metrics; the function also acts as the independent arbiter of the performance of the use cases. Beyond that, some leading companies are moving toward automated systems for monitoring use-case performance, including ongoing model validation and upgrades.

10. No one is hyperfocused on identifying potential ethical, social, and regulatory implications of analytics initiatives

It is important to be able to anticipate how digital use cases will acquire and consume data and to understand whether there are any compromises to the regulatory requirements or any ethical issues.

One large industrial manufacturer ran afoul of regulators when it developed an algorithm to predict absenteeism. The company meant well; it sought to understand the correlation between job conditions and absenteeism so it could rethink the work processes that were apt to lead to injuries or illnesses. Unfortunately, the algorithm was able to cluster employees based on their ethnicity, region, and gender, even though such data fields were switched off, and it flagged correlations between race and absenteeism.

Luckily, the company was able to pinpoint and preempt the problem before it affected employee relations and led to a significant regulatory fine. The takeaway: working with data, particularly personnel data, introduces a host of risks from algorithmic bias. Significant supervision, risk management, and mitigation efforts are required to apply the appropriate human judgment to the analytics realm.

First response: As part of a well-run broader risk-management program, the CDO should take the lead, working with the CHRO and the company’s business-ethics experts and legal counsel to set up resiliency testing services that can quickly expose and interpret the secondary effects of the company’s analytics programs. Translators will also be crucial to this effort.
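As one illustration of what such a test might check, the sketch below compares the make-up of each cluster a model produces against the overall population for a protected attribute that was withheld from the model. It is a rough diagnostic under stated assumptions, not a complete fairness audit: the function names, the 0.2 gap threshold, and the toy data are all hypothetical, and it assumes the protected attribute is available to the reviewers separately from the model's inputs.

```python
from collections import Counter, defaultdict


def group_shares(labels):
    """Share of each group label in a list, e.g. {'A': 0.5, 'B': 0.5}."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def flag_skewed_clusters(cluster_ids, protected, max_gap=0.2):
    """Flag clusters whose group mix departs from the overall mix by more than max_gap.

    A flagged cluster suggests the model may be reconstructing the protected
    attribute from proxy variables, even though it never saw that field.
    """
    overall = group_shares(protected)
    members_by_cluster = defaultdict(list)
    for cluster, group in zip(cluster_ids, protected):
        members_by_cluster[cluster].append(group)

    flagged = {}
    for cluster, members in members_by_cluster.items():
        shares = group_shares(members)
        gaps = {g: shares.get(g, 0.0) - overall[g] for g in overall}
        worst = max(gaps, key=lambda g: abs(gaps[g]))
        if abs(gaps[worst]) > max_gap:
            flagged[cluster] = (worst, round(gaps[worst], 2))
    return flagged


# Toy data: cluster assignments from a model, plus a protected attribute
# that was held out of the model's inputs (all values are made up).
cluster_ids = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2]
protected = ["A", "A", "A", "B", "B", "B", "B", "A", "A", "B", "A", "B"]

flagged = flag_skewed_clusters(cluster_ids, protected)
print(flagged)
```

A flag here is a prompt for human review rather than a verdict; interpreting why a cluster is skewed still calls for the judgment of the business, ethics, and legal experts named above.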

There is no time to waste. It is imperative that businesses get analytics right. The upside is too significant for it to be discretionary. Many companies, caught up in the hype, have rushed headlong into initiatives that have cost vast amounts of money and time and returned very little.

By identifying and addressing the ten red flags presented here, these companies have a second chance to get on track.